VR Hand Tracking Application + Research Study

Question: Most commercial virtual reality applications with self-avatars give users a “one-size-fits-all” avatar. How do variations in avatar hand size affect user experience?

GOAL

Design a research application and publish findings on how user experience, task efficiency, and feelings of body ownership are influenced by varying hand sizes and input modalities.

PARTICULARS

Role: VR Designer & Research Scientist with Meta Research | 8-person research and development team | 2017-2019

Tools: Unity, Maya, Git, Adobe Creative Suite

Technology: Oculus Rift and Touch controllers, Online Optical Marker-Based Hand Tracking, PC

SUCCESS

The case study led to published findings that inform the design of virtual avatars. The application is currently being used in ongoing studies.

Let’s make a fun research experience!

Meta Research was looking to test its latest motion capture hand tracking technology. I incorporated it into a VR application and a published study on how best to design avatar appearance.

Many of the participants would be experiencing VR for the first time, so I also wanted the application to be an engaging and memorable experience. To encourage interaction with the virtual environment while keeping attention on the appearance of the avatar hands, I designed the research application as a puzzle game in which users assemble toy blocks into playful sculptures.

From Paper to Prototype

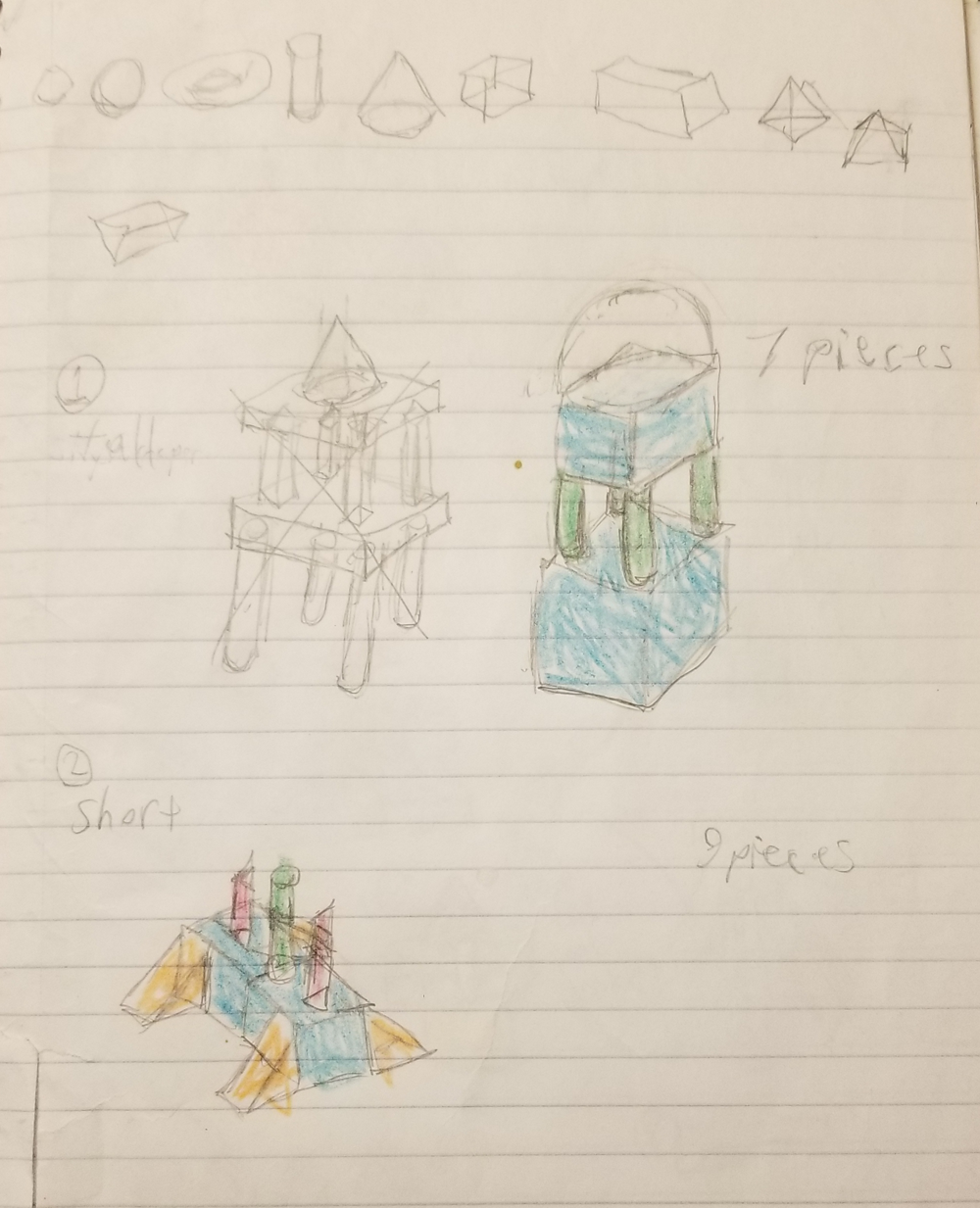

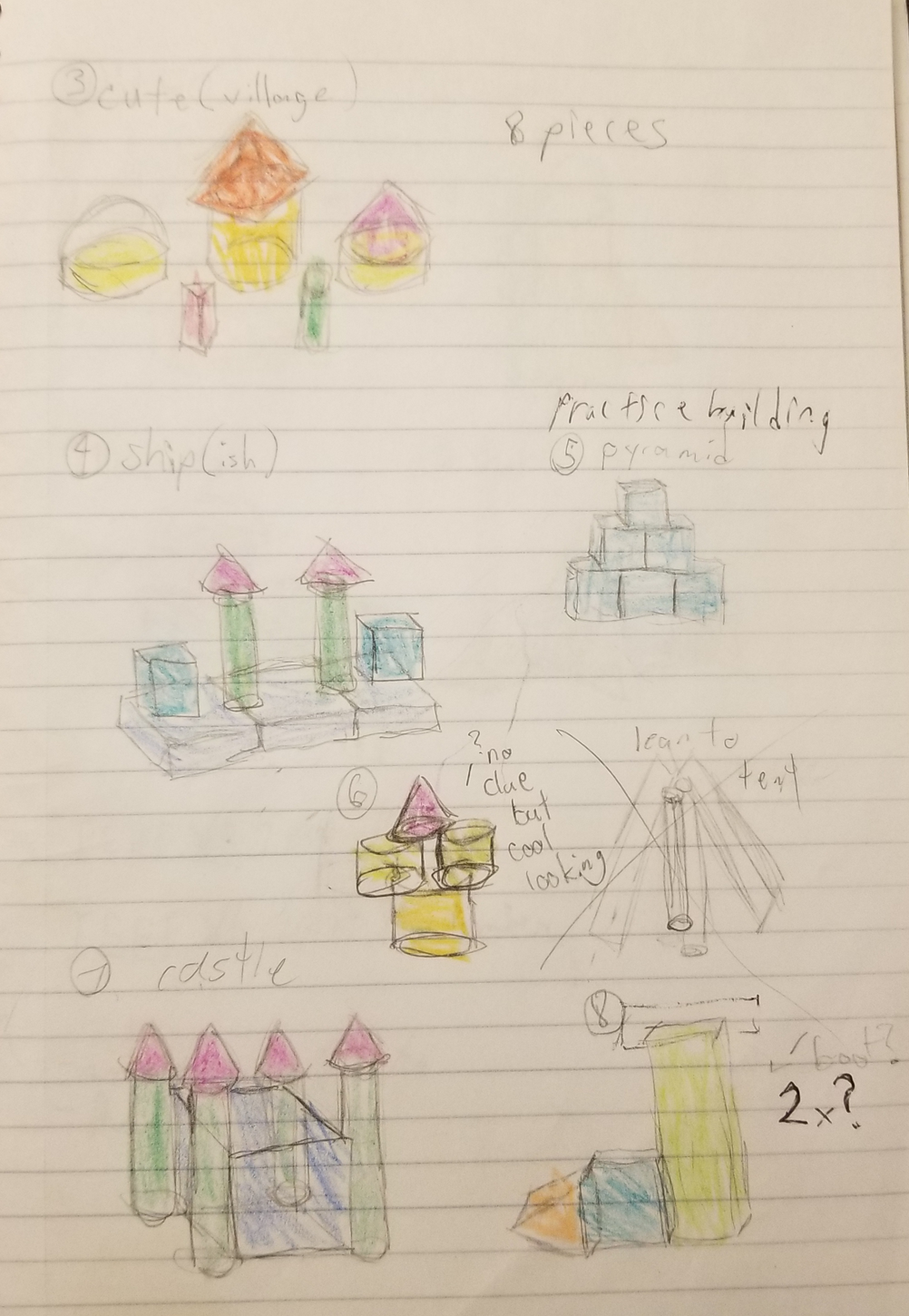

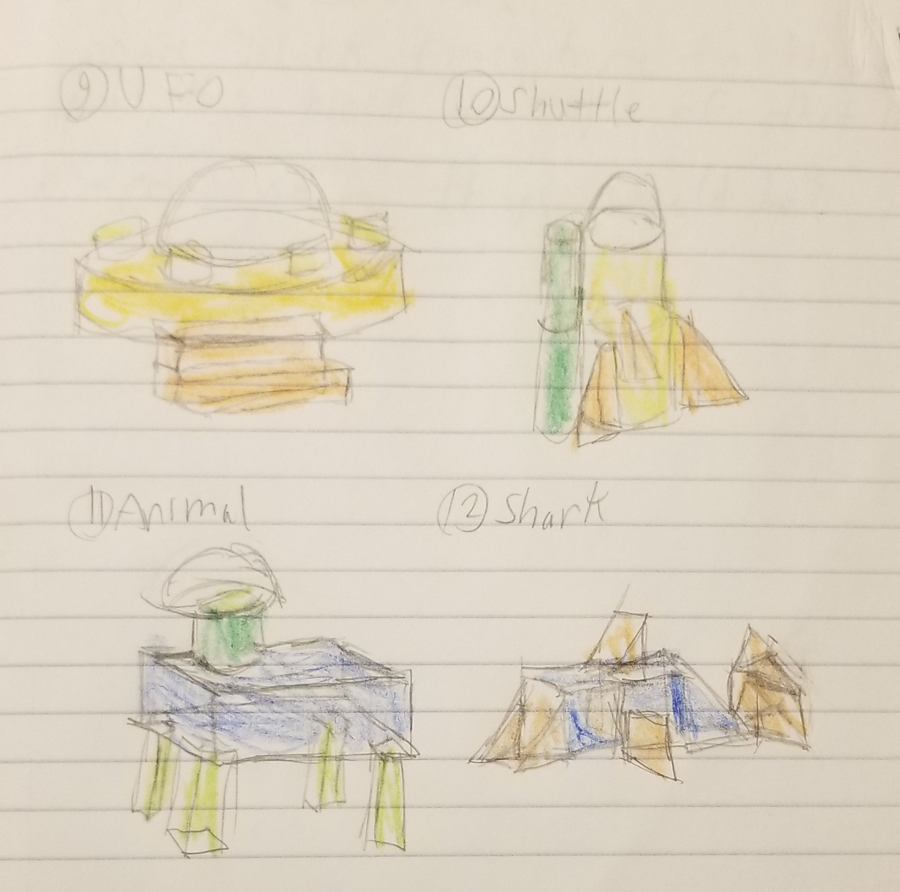

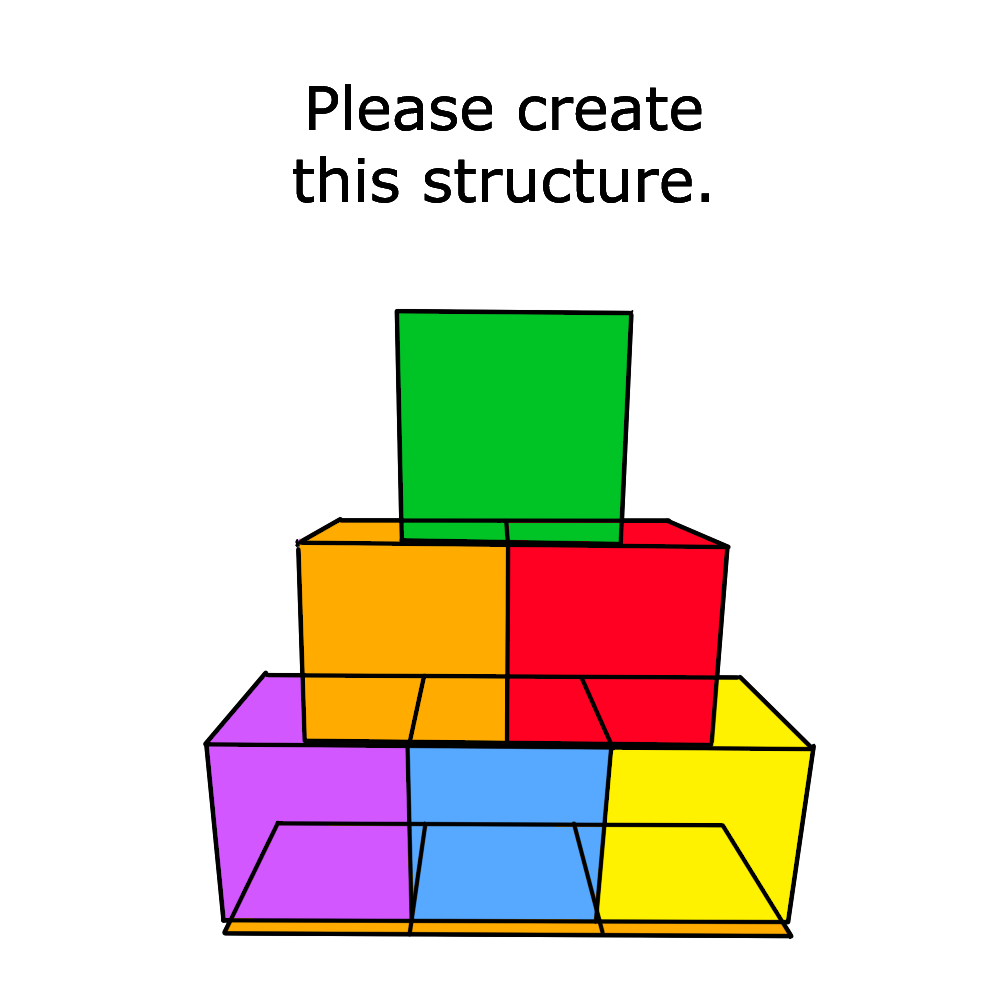

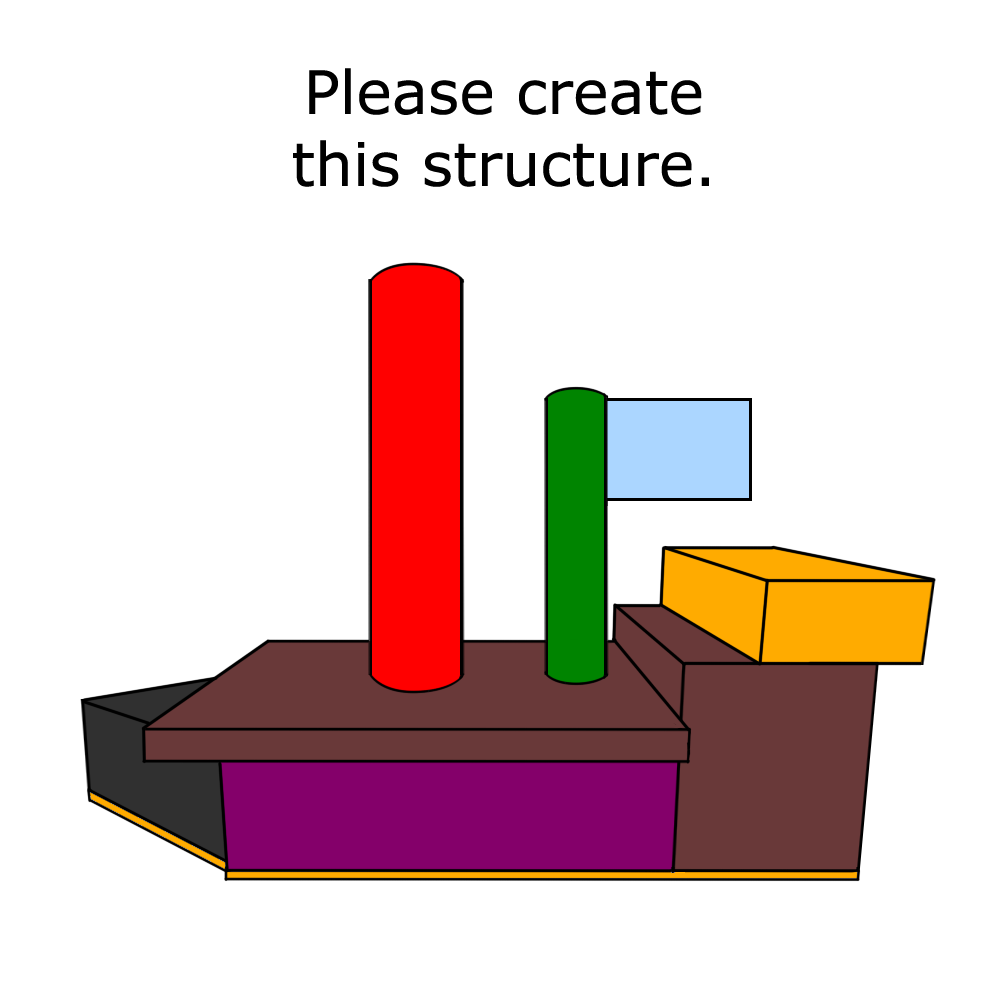

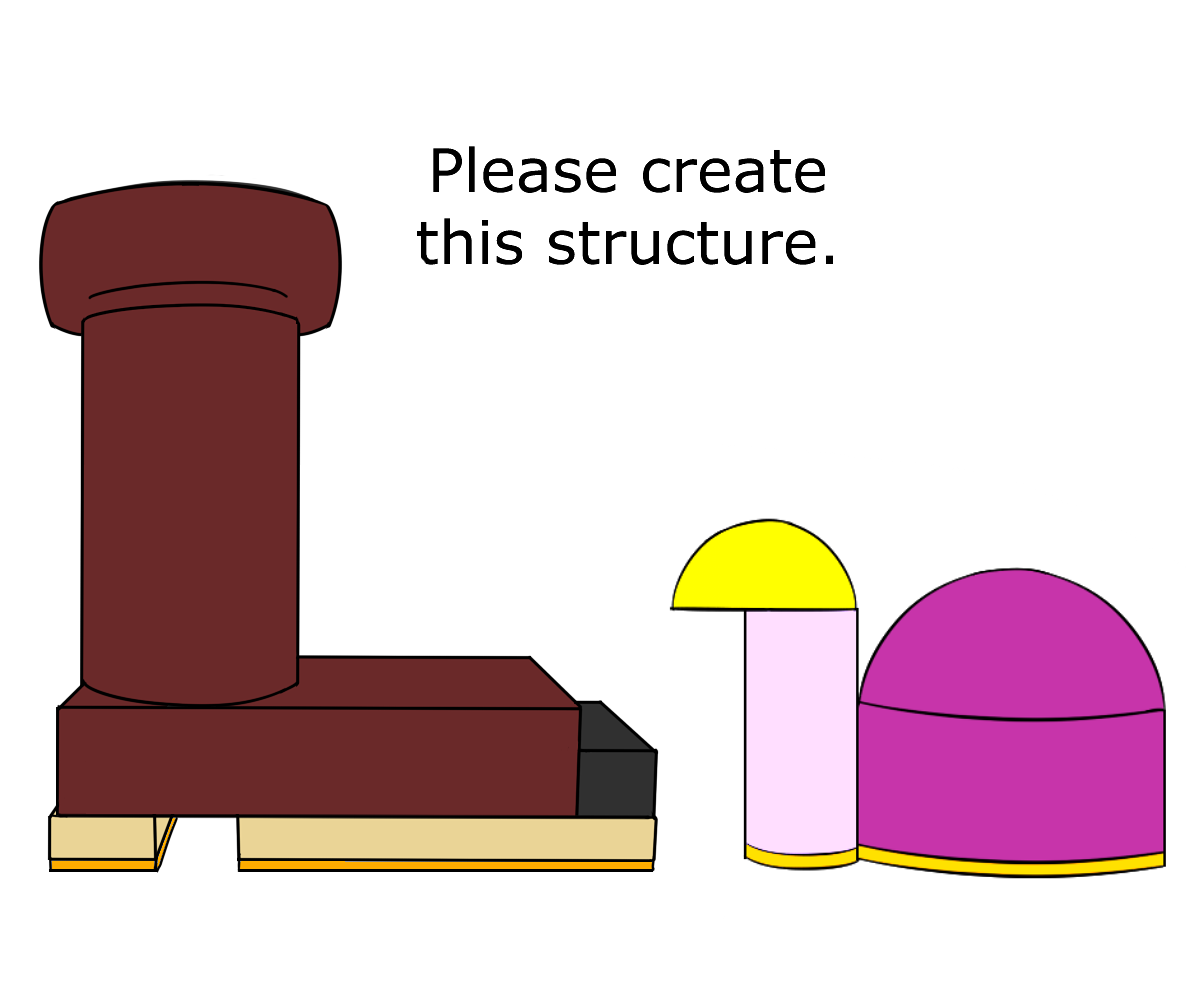

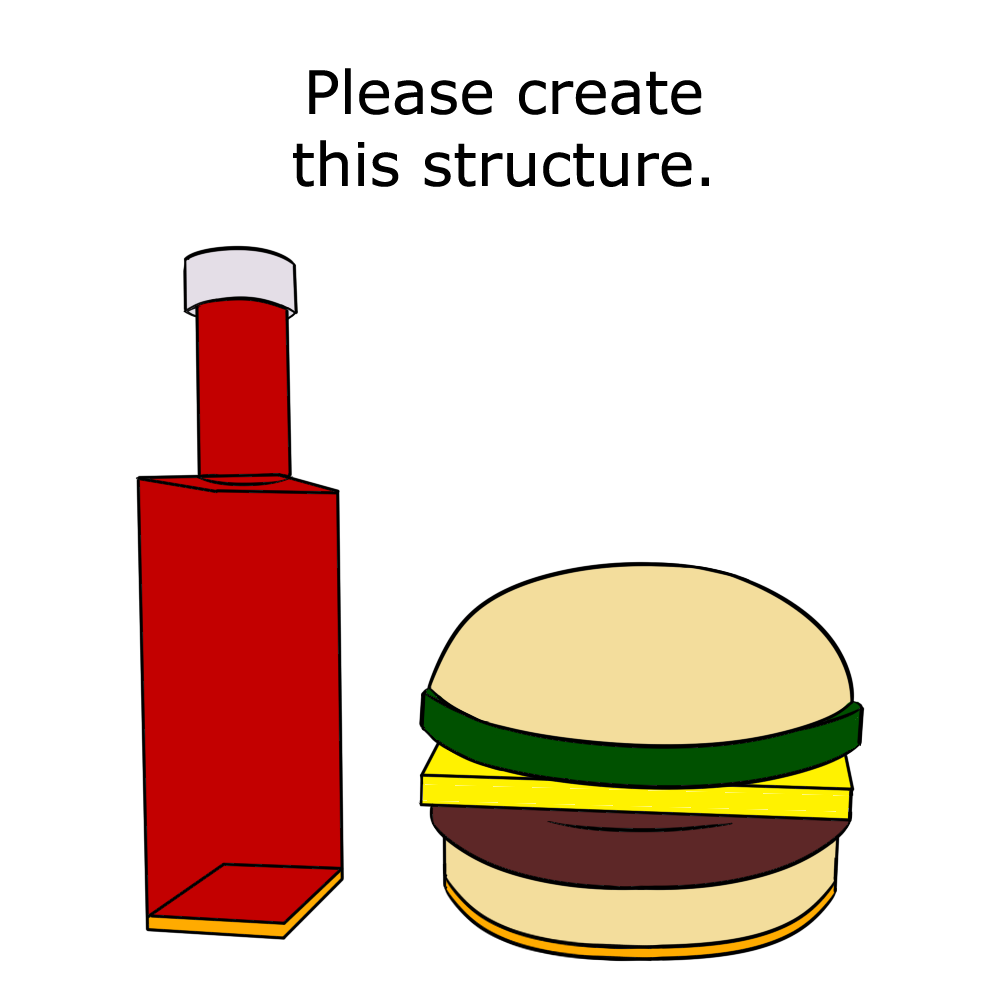

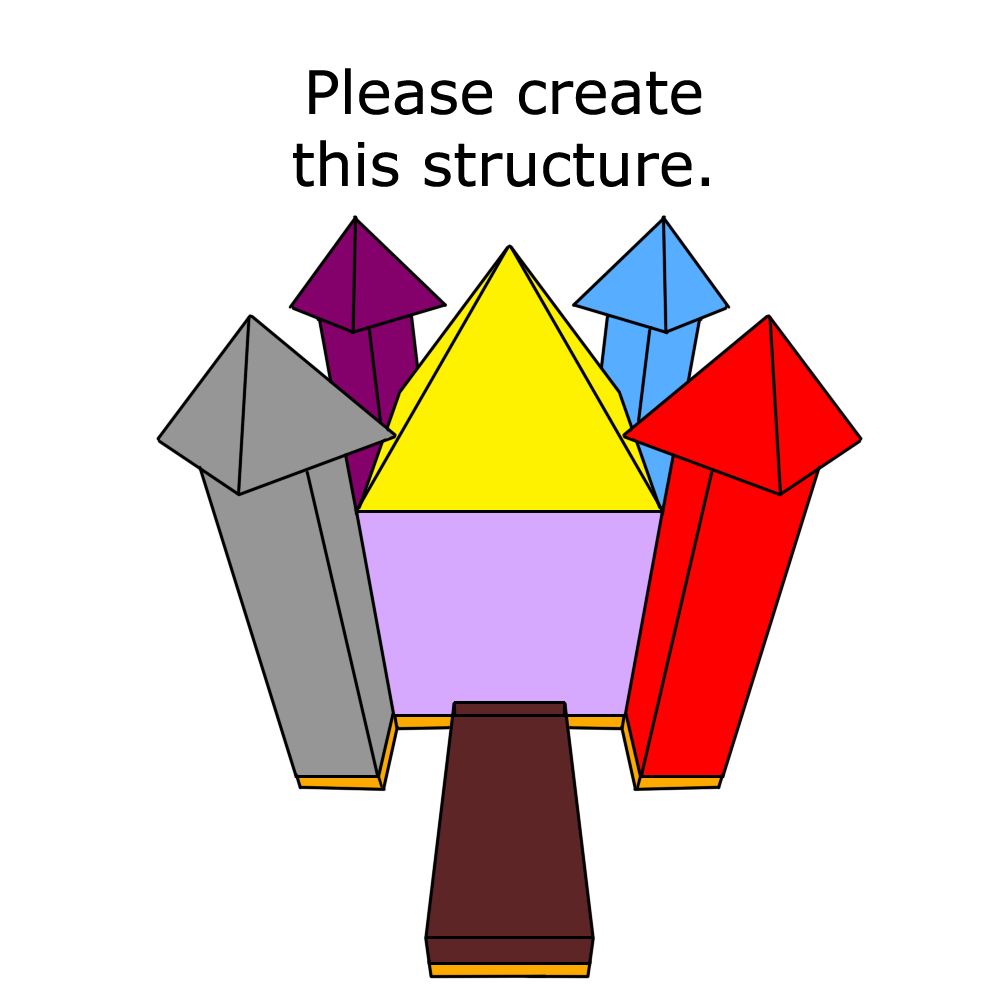

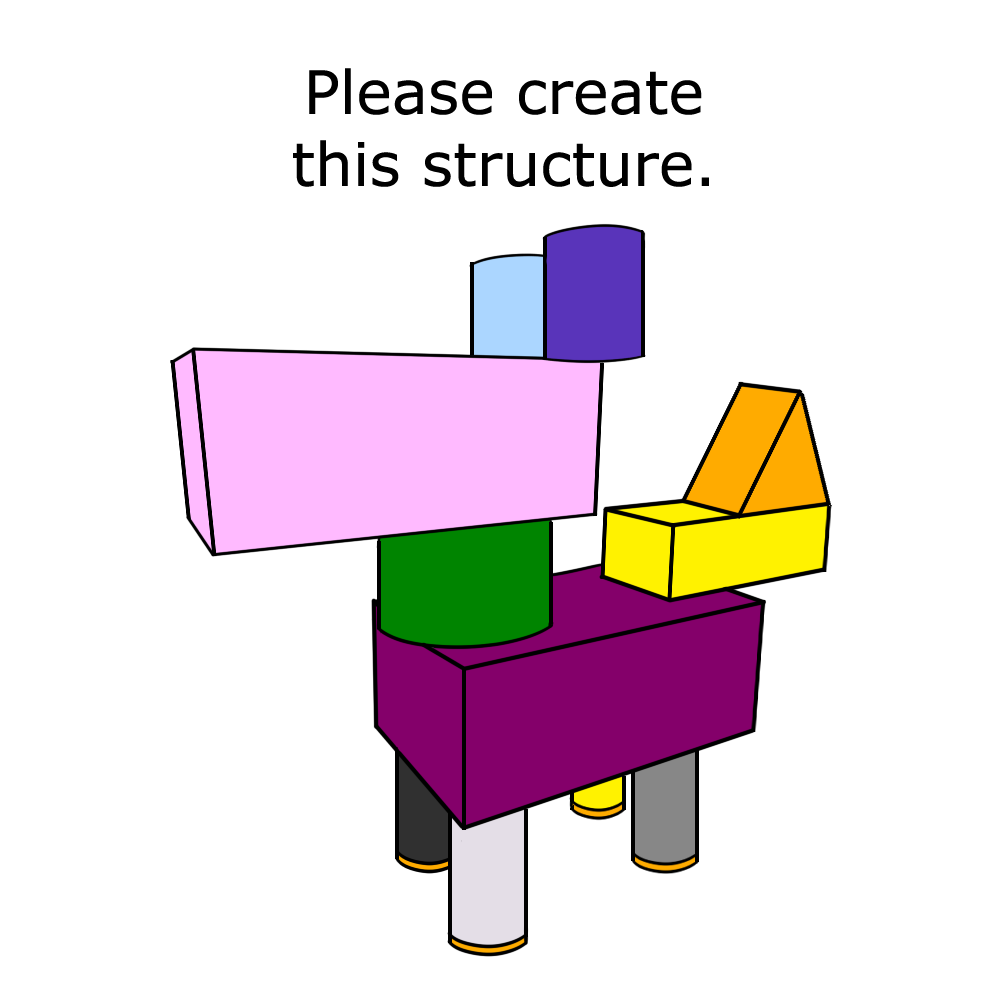

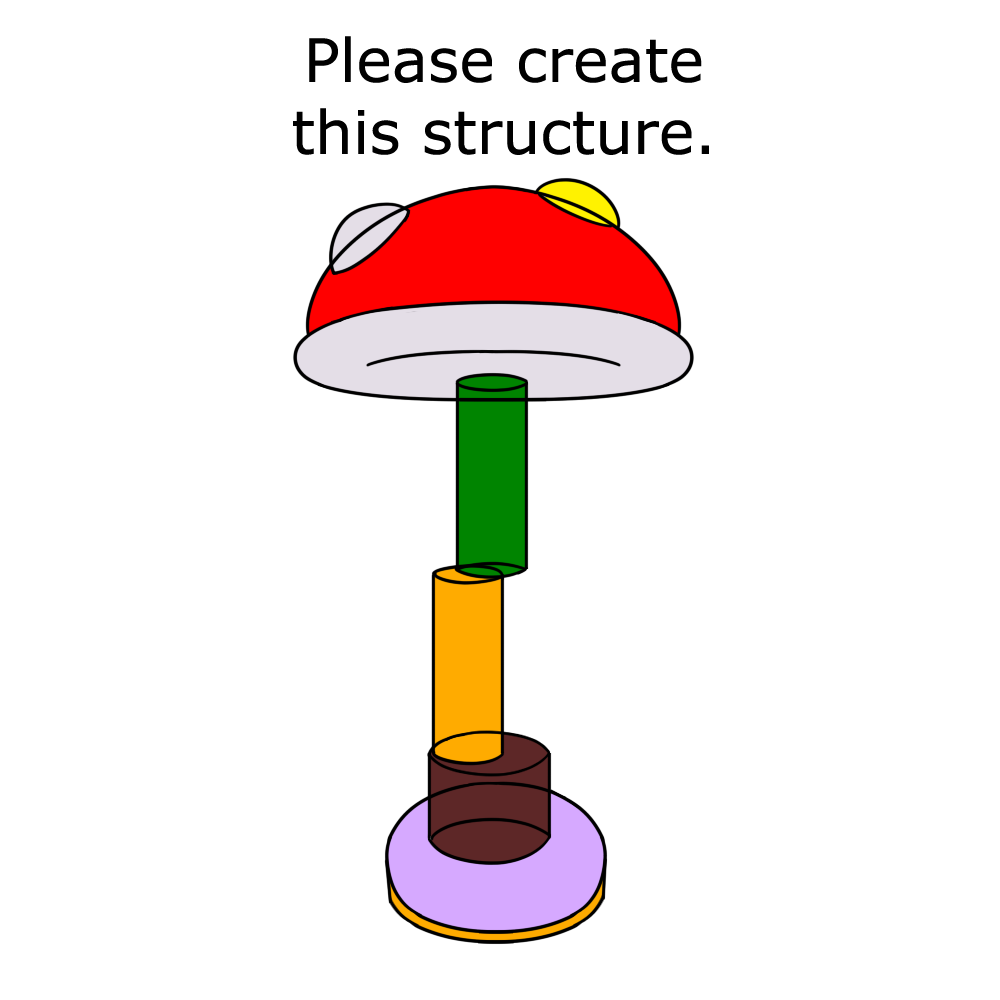

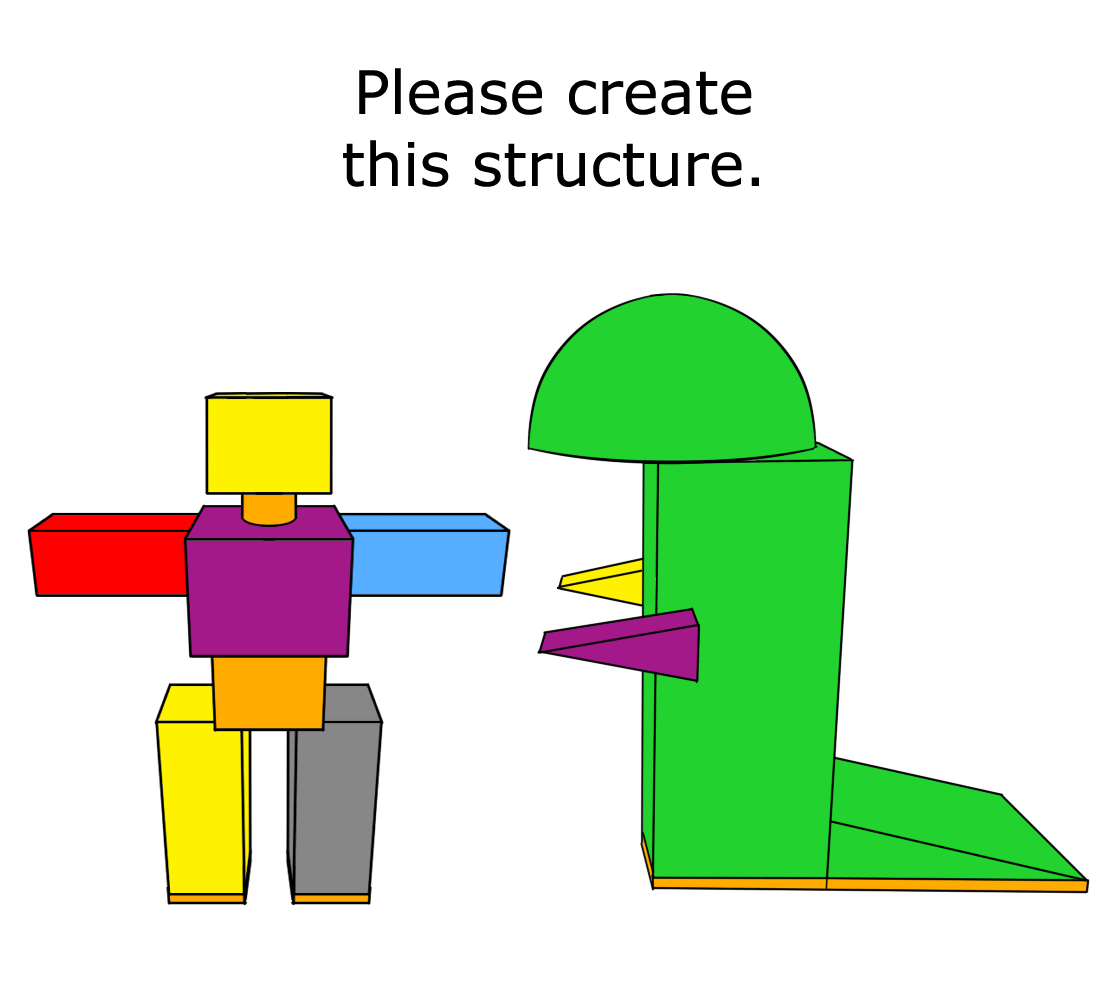

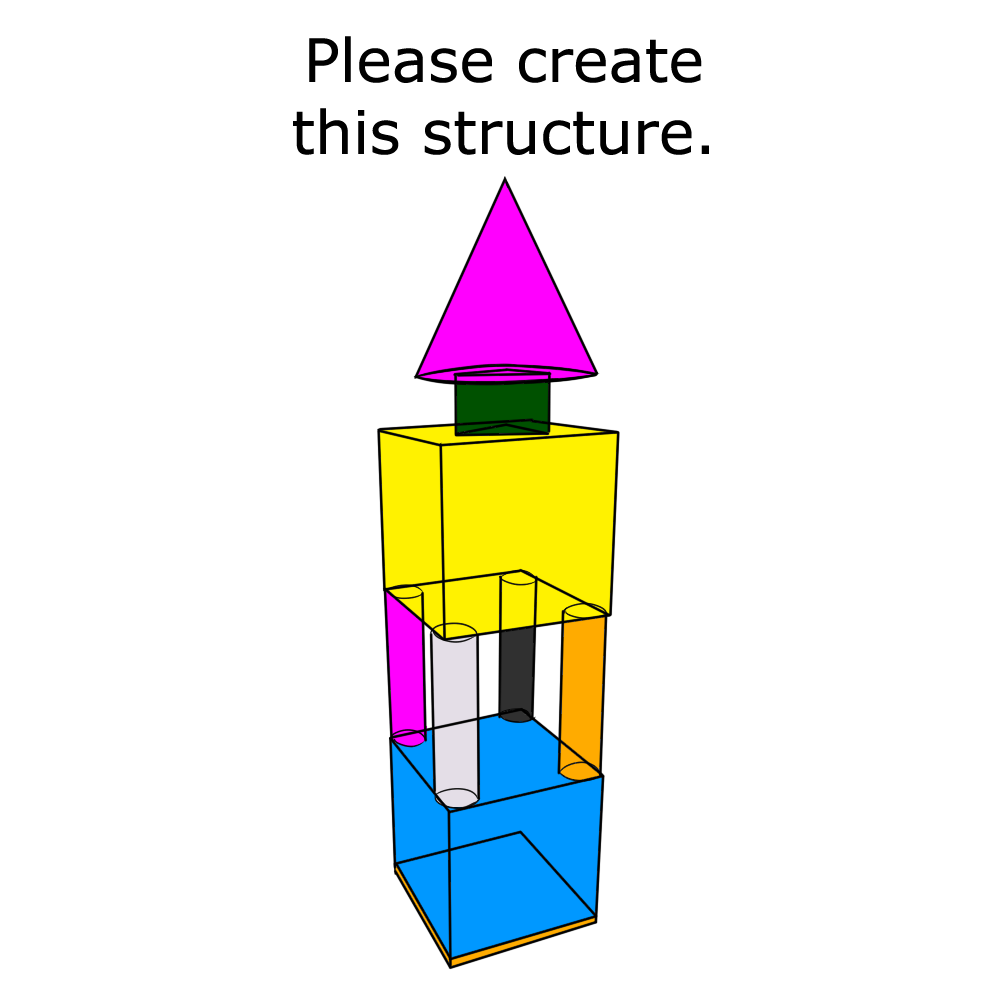

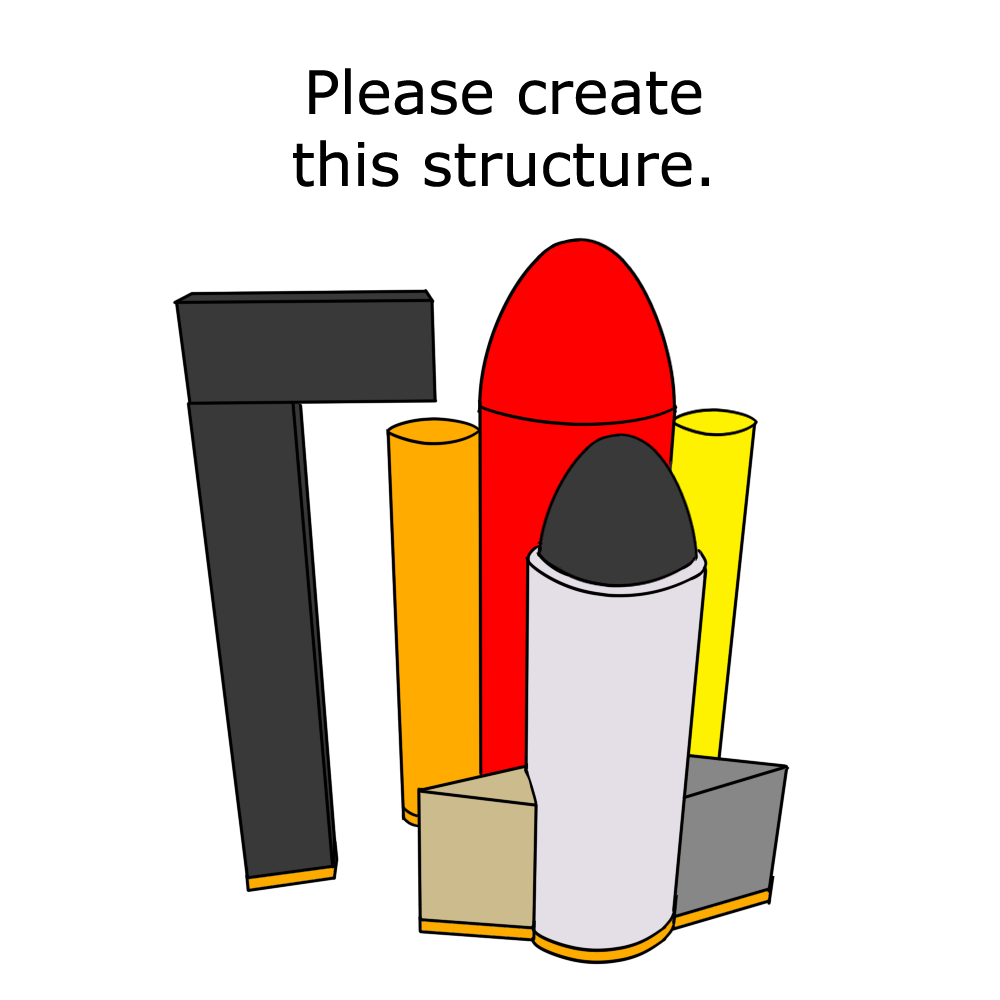

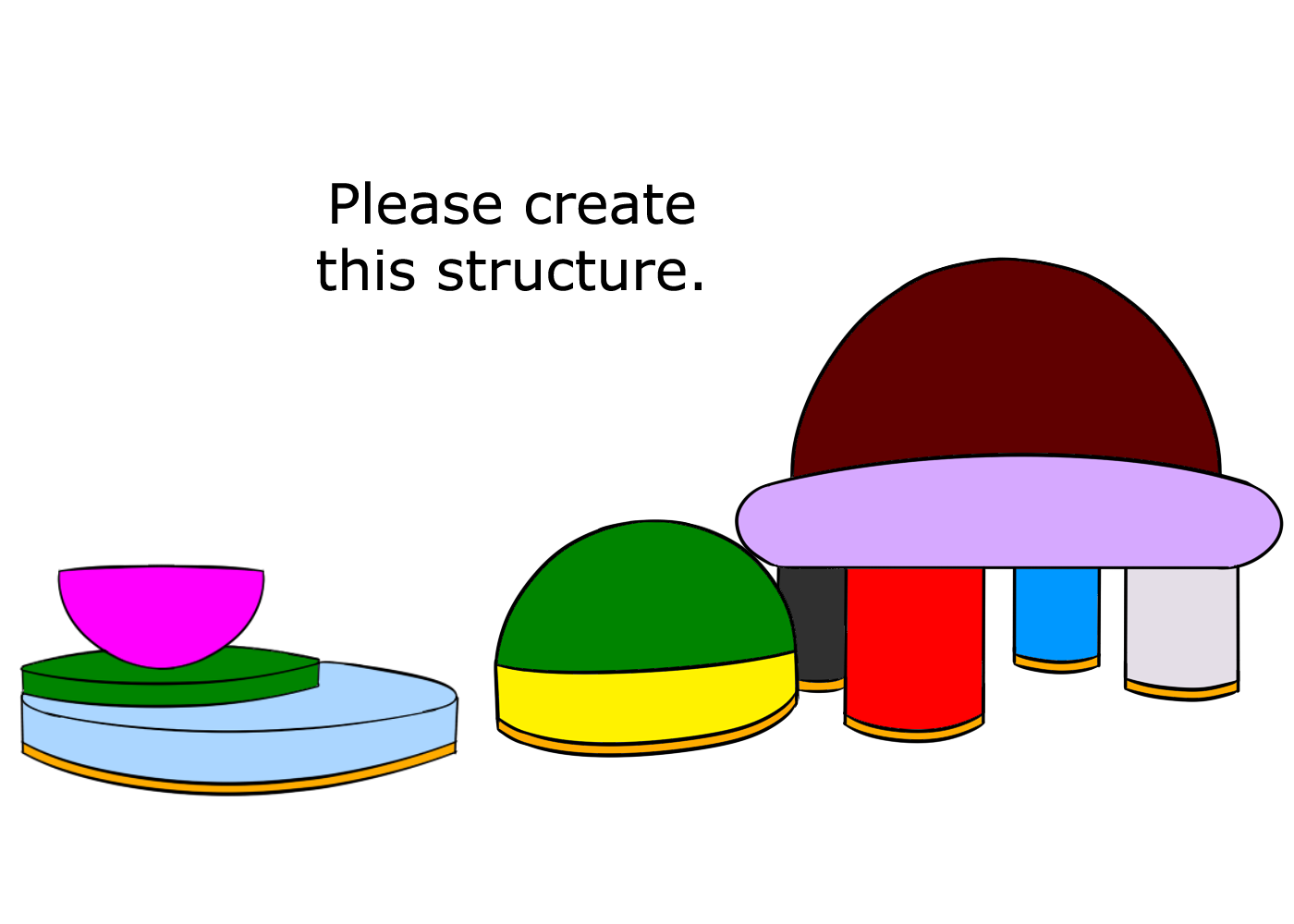

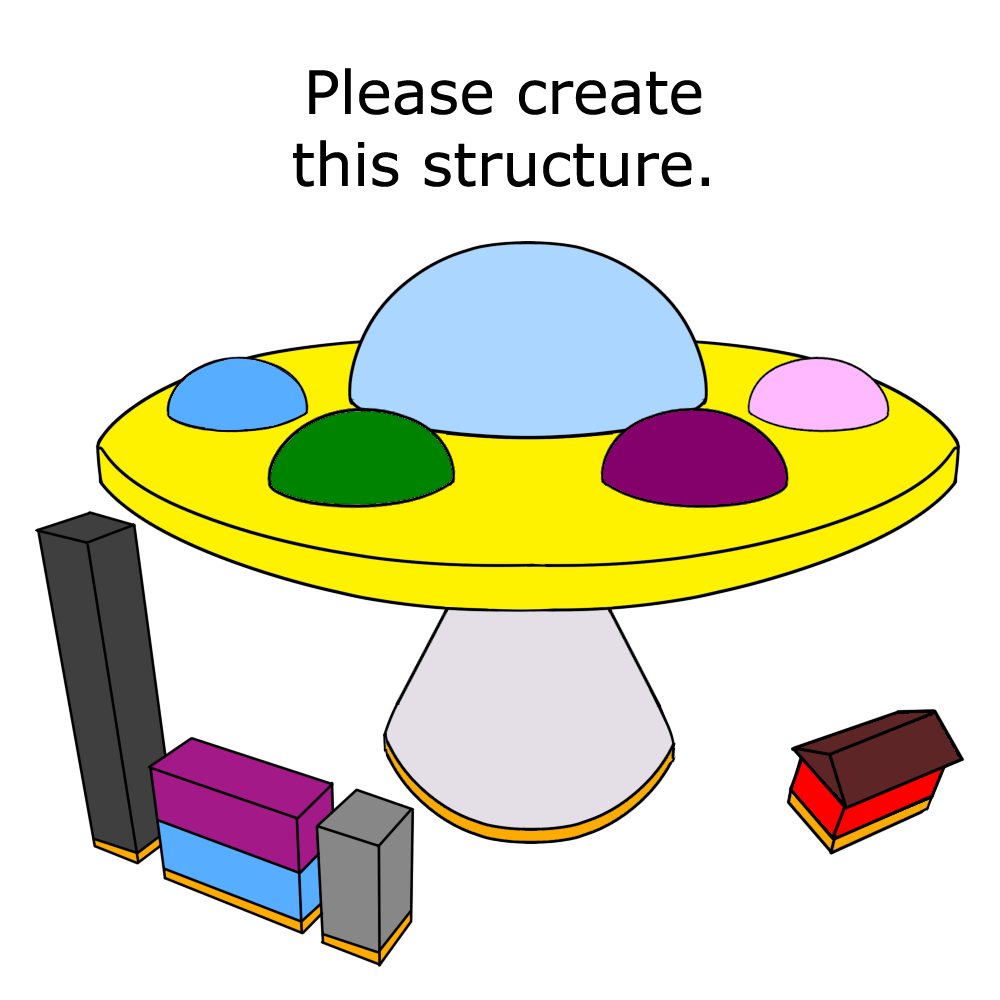

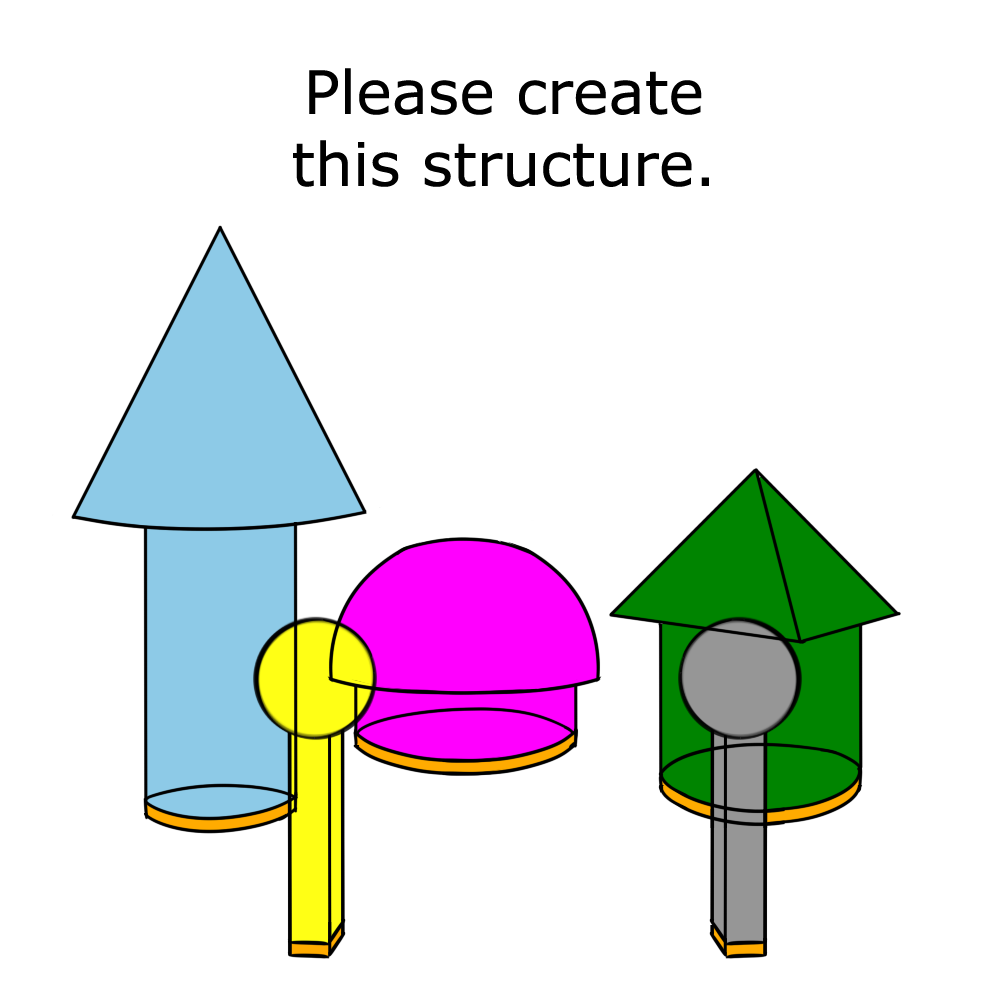

I sketched concepts for whimsical sculptures and asked my 8-person research and development team for feedback. I wanted the completed puzzles to be quickly recognizable structures, and the individual toy blocks to vary in shape and size to simulate the diversity of objects people handle every day.

To further define the puzzles, I created digital illustrations and modeled the blocks in Maya at the same time, playing the 2D and 3D designs off each other to make sure each component's shape was distinctive and its orientation relative to the other pieces was clear. I chose colors that remain distinguishable for people with color blindness and that differentiate blocks of the same shape within a single puzzle.

The illustrations above show the three tutorial puzzles as well as the twelve puzzles used in the final study.

Making Intuitive Interactions

Current commercial virtual reality applications can track users' hands, but little research has compared user preference for motion-tracked body parts versus controller input. I therefore built the application so that participants could control their virtual hands either directly or through game controllers, which let me observe how they perceive their virtual hands in each case.

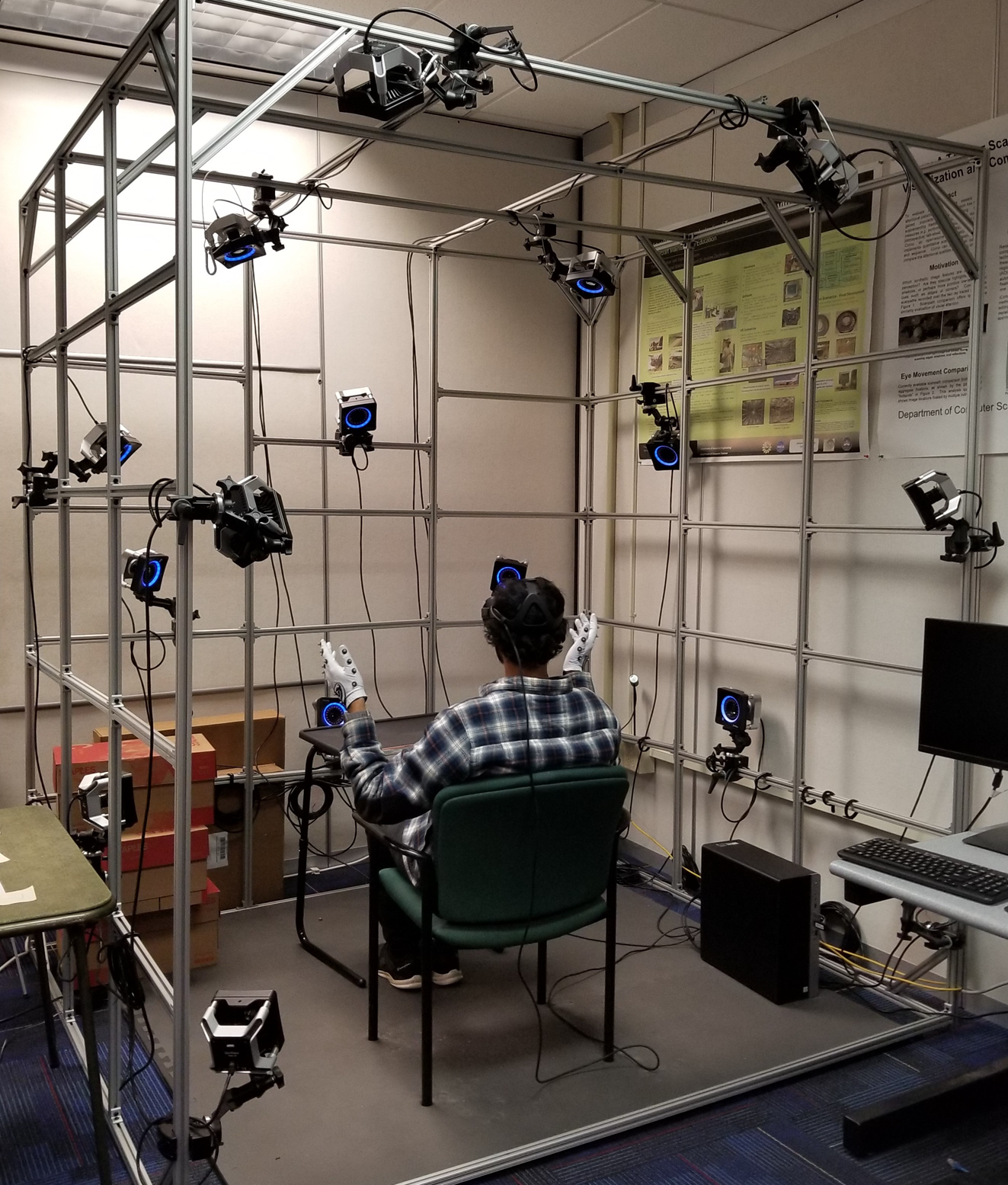

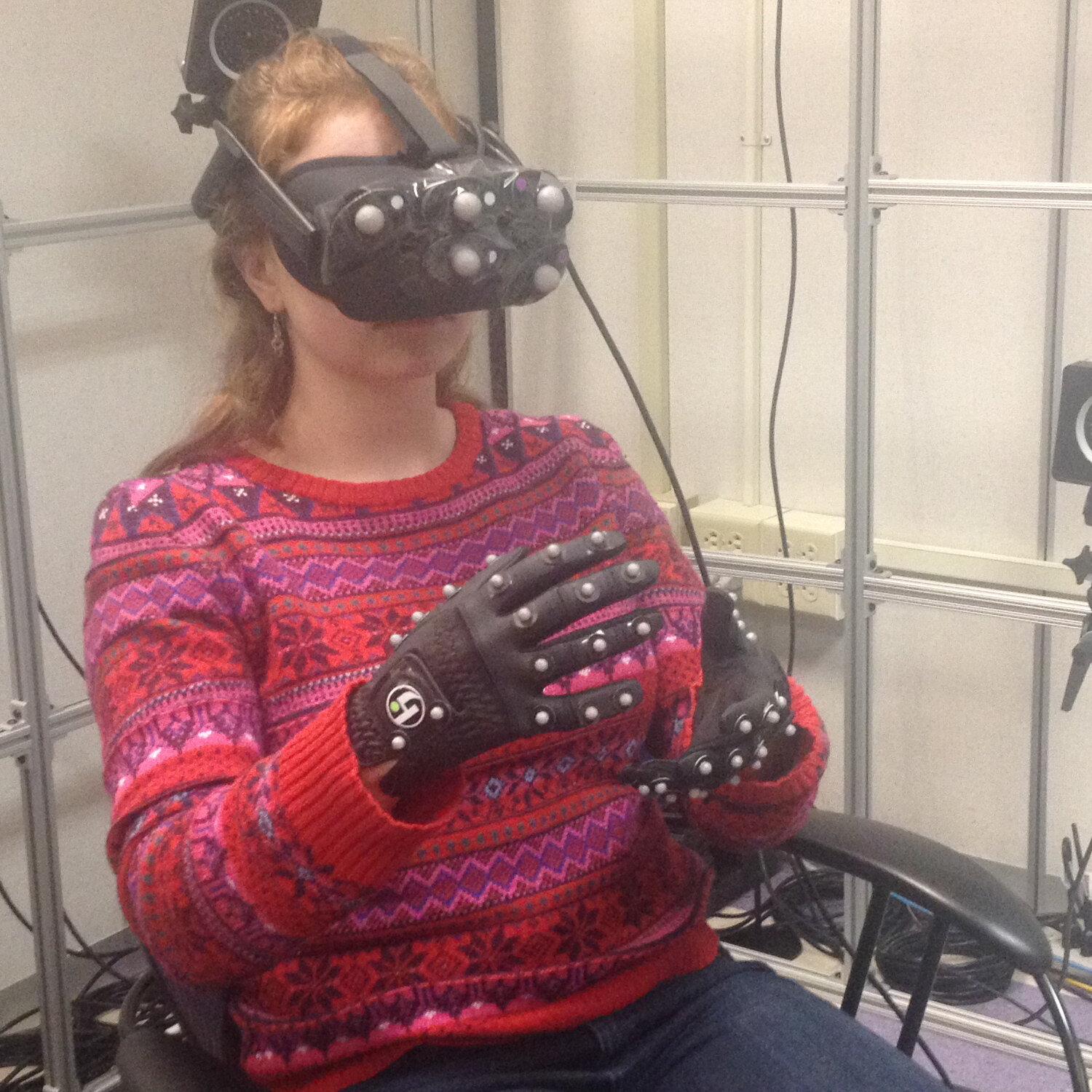

Participants control their avatar with either Oculus Touch controllers or Online Optical Marker-Based Hand Tracking. For the glove condition, a 16-camera OptiTrack motion capture system tracks the gloves.

With both input modalities in place, I began testing in-game mechanics while the 3D models were still being created, incorporating suggestions from my team and pilot users as the project developed.

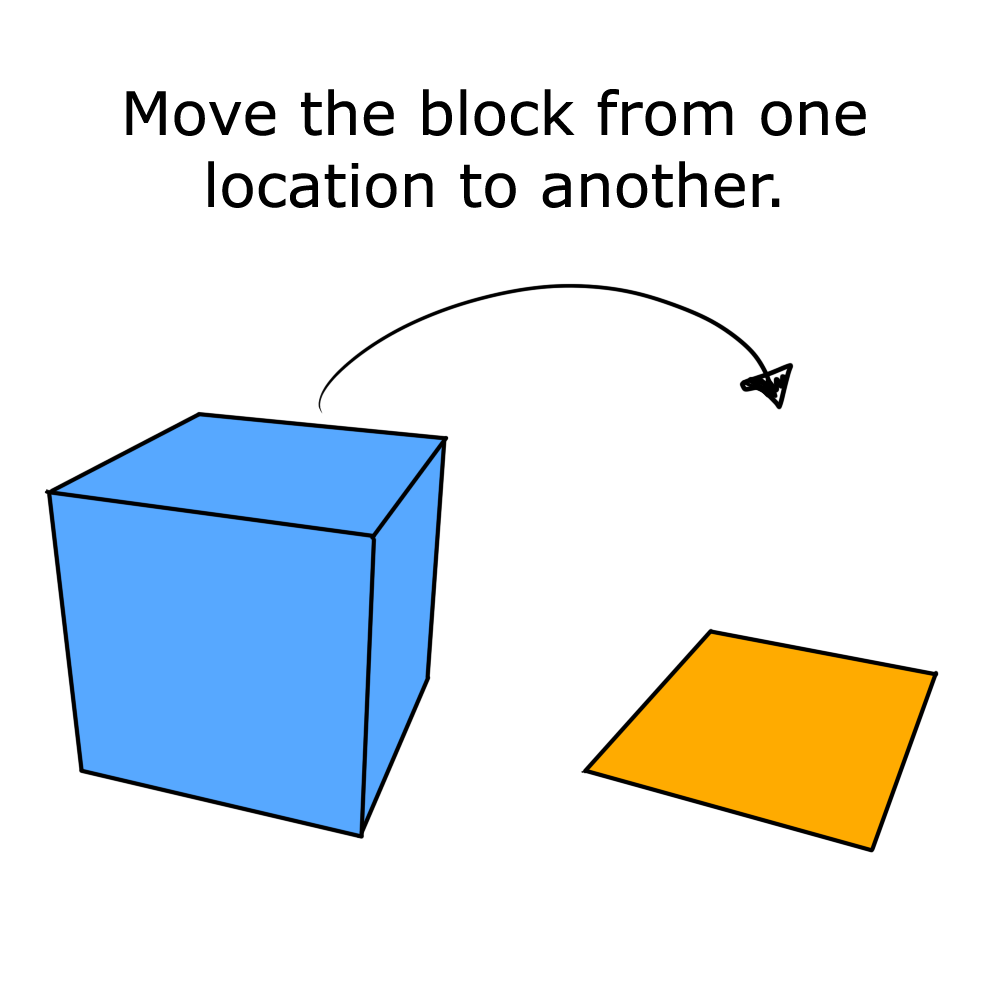

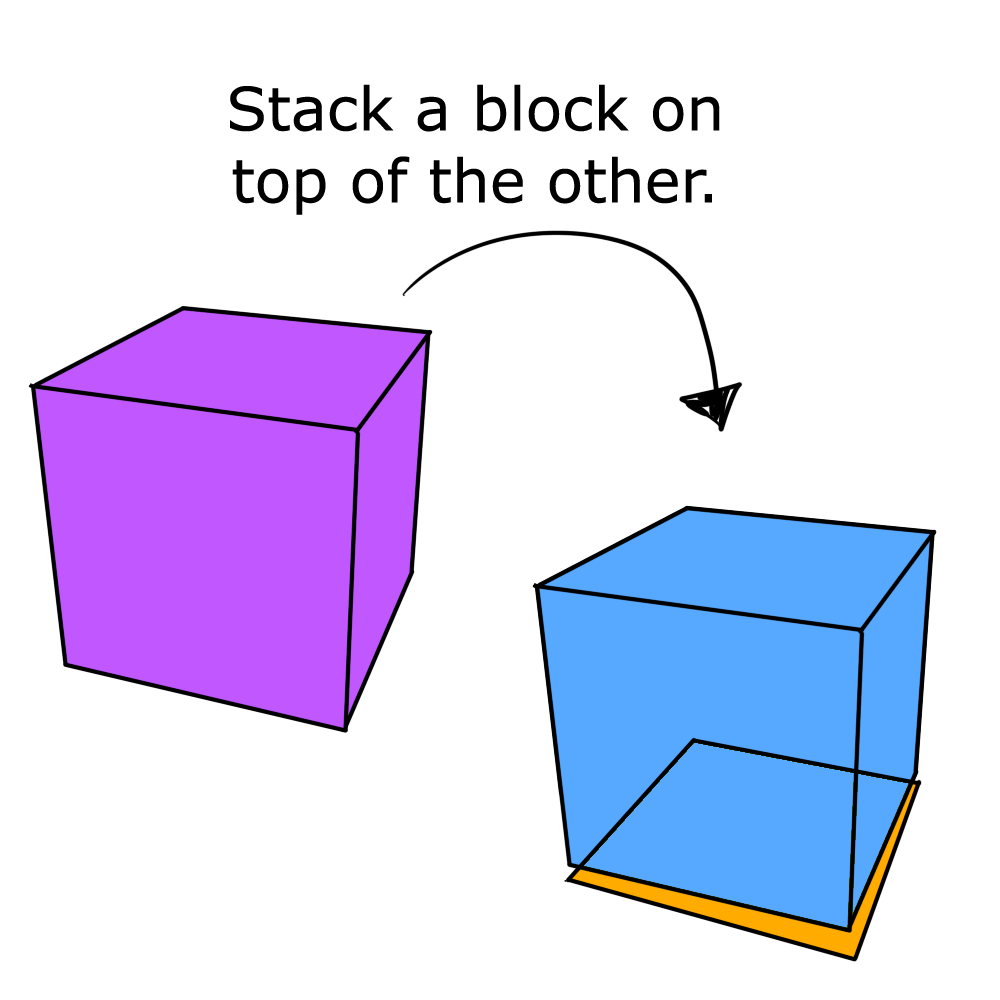

The application mechanics work as follows. When a new puzzle is introduced, its blocks are randomly distributed within reach in front of the user. A block turns semi-transparent when it is close enough to be picked up and makes a small noise when it is picked up. When the held block touches the block it must be stacked on to progress the puzzle, that bottom block highlights in green; letting go of the held block then snaps it into place with a clicking noise. Gravity was not implemented so that participants would not easily lose blocks: a block released in mid-air stays in place until it is picked up again. Users tended to let go of a held block while it was still a short distance from the block they wanted to attach it to, so I made the collision volumes larger than the 3D models. As a result, blocks do not have to intersect unnaturally for the puzzle to progress.
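The stacking rule can be sketched in a few lines. This is a minimal Python illustration, not the project's Unity code: the `Block` class, `padded_overlap`, `release`, the axis-aligned boxes, and the 2 cm `COLLISION_PADDING` are all hypothetical choices. It shows how an enlarged collision volume lets a block snap into place even when its visible model stops short of the target, and how a block that misses simply floats because no gravity is applied.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical value: each block's collision box extends this far (metres)
# beyond its visual model on every side.
COLLISION_PADDING = 0.02


@dataclass
class Block:
    name: str
    center: tuple[float, float, float]        # world-space centre (metres)
    half_extents: tuple[float, float, float]  # half-size of the visual model
    placed: bool = False


def padded_overlap(a: Block, b: Block, padding: float = COLLISION_PADDING) -> bool:
    """Axis-aligned box test on collision volumes enlarged by `padding`.

    The same test could drive the green highlight on the target block
    while the held block is in contact with it.
    """
    for axis in range(3):
        gap = abs(a.center[axis] - b.center[axis])
        if gap > a.half_extents[axis] + b.half_extents[axis] + 2 * padding:
            return False
    return True


def release(held: Block, target: Block,
            snap_offset: tuple[float, float, float]) -> str:
    """Called when the user lets go of `held` near the block it belongs on."""
    if padded_overlap(held, target):
        # Snap into the stacked position relative to the target block.
        held.center = tuple(t + o for t, o in zip(target.center, snap_offset))
        held.placed = True
        return "snapped"
    # No gravity: an unattached block stays exactly where it was released.
    return "floating"
```

For example, a 5 cm cube released 1 cm above its target does not visually touch it, yet its padded collision box does, so `release` snaps it; calling `padded_overlap` with `padding=0.0` on the same pair reports no contact.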

Research Design

The final layer of creation was designing and applying a research plan.

The study procedure is as follows. Participants begin with an introduction and a demographic questionnaire. In VR, the avatar is calibrated to fit their body. Participants then complete the main experiment, solving each of the 12 puzzles with either the glove or the Touch interface and with small, fit, or large hands. At the end, qualitative feedback is collected.
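The procedure does not say how the 12 puzzles map onto the two modalities and three hand sizes, or how condition order is assigned. The sketch below assumes that every participant experiences all six combinations, that order is shuffled per participant, and that puzzles are split evenly (two per condition); `build_schedule` and the condition labels are hypothetical names used only for illustration.

```python
from __future__ import annotations

import itertools
import random

MODALITIES = ("glove", "touch")
HAND_SIZES = ("small", "fit", "large")


def build_schedule(participant_id: int, puzzles: list[str]) -> list[tuple[str, str, list[str]]]:
    """Assign puzzles to every modality x hand-size condition for one participant.

    Assumptions (not stated in the study description): all six conditions are
    seen by each participant, their order is shuffled per participant, and the
    puzzles are divided evenly across conditions.
    """
    conditions = list(itertools.product(MODALITIES, HAND_SIZES))
    rng = random.Random(participant_id)  # seeded so a session can be reproduced
    rng.shuffle(conditions)
    per_condition, remainder = divmod(len(puzzles), len(conditions))
    if remainder:
        raise ValueError("puzzle count must divide evenly across conditions")
    schedule = []
    for i, (modality, size) in enumerate(conditions):
        assigned = puzzles[i * per_condition:(i + 1) * per_condition]
        schedule.append((modality, size, assigned))
    return schedule
```

A seeded shuffle keeps each session reproducible, but it does not balance order effects across participants; a Latin-square assignment is the usual alternative when that matters.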

Participants begin the virtual experiment in a calibration area, where the avatar is adjusted to fit their body. Torso and arm sizes are estimated from a T-pose the participant holds while seated in the chair, and the experimenter can adjust them manually. Hand sizes are determined by the size of the glove the participant wears.
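One way this calibration could be expressed is sketched below, assuming tracked shoulder and wrist positions are available during the T-pose. Every constant here (the default rig lengths, the glove-size table, and the small/fit/large multipliers) is a placeholder invented for illustration, not a value from the study. Only the arm is shown; torso scaling would follow the same measured-over-default pattern.

```python
import math

# All values below are hypothetical placeholders, not the study's parameters.
AVATAR_ARM_LENGTH = 0.60    # shoulder-to-wrist length of the default avatar rig (m)
AVATAR_HAND_LENGTH = 0.185  # wrist-to-fingertip length of the default avatar hand (m)
GLOVE_HAND_LENGTH = {"S": 0.17, "M": 0.185, "L": 0.20}  # assumed hand length per glove size (m)
CONDITION_FACTOR = {"small": 0.75, "fit": 1.0, "large": 1.25}


def arm_scale(shoulder, wrist, manual_adjust=0.0):
    """Scale for the avatar arm from tracked shoulder/wrist positions in a T-pose.

    `manual_adjust` is an additive correction the experimenter can apply.
    """
    measured = math.dist(shoulder, wrist)
    return measured / AVATAR_ARM_LENGTH + manual_adjust


def hand_scale(glove_size, condition):
    """Scale for the avatar hand: fit it to the glove size, then apply the condition."""
    fit = GLOVE_HAND_LENGTH[glove_size] / AVATAR_HAND_LENGTH
    return fit * CONDITION_FACTOR[condition]
```

Separating the "fit" scale from the condition multiplier means the small and large hands are always defined relative to each participant's own hand rather than to a fixed absolute size.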

The following images show gameplay in the Controller versus the Glove condition.

After each condition, a study questionnaire replaces the puzzle instructions. To avoid accidental presses and give participants' arms a rest, interaction with the questionnaire objects is disabled; instead, participants read their answers aloud for the researcher to record.

The Result

My findings show that participants preferred the appearance of hands that fit them over larger hands, and that direct hand control produced stronger feelings of body ownership and realism, as well as higher user preference, than a handheld device.

The takeaway for developers is that the choice of interaction modality should depend on the application: if realism and the strength of the virtual hand illusion are important, direct hand control is recommended; if task efficiency is the main focus, controllers should be used. Participants rated interaction fun at an average of 5.7 out of 7, which suggests that an engaging application can be fun and immersive under any of the tested conditions.

The application is currently in use for multiple user studies on avatar appearance and body ownership. A PC version is available for download.

Publication

Lin, L., Normoyle, A., Adkins, A., Sun, Y., Robb, A., Ye, Y., Di Luca, M., and Jörg, S. The Effect of Hand Size and Interaction Modality on the Virtual Hand Illusion. In 2019 IEEE Virtual Reality Conference.